24-June-25

Continuing to Learn About AWS Lambda & Provisioning a Lambda Function on AWS Using Terraform

Daily Quest #7: RDS & Serverless Foundations - Part 2

AWS has a service called Lambda for serverless applications. Lambda runs code without you provisioning or managing servers: it runs your code on high-availability compute infrastructure and manages all the computing resources, including server and operating system maintenance, capacity provisioning, automatic scaling, and logging. Reference:

- https://docs.aws.amazon.com/lambda/latest/dg/welcome.html
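Concretely, the code Lambda runs is just a handler function that receives an `event` (the invocation payload) and a `context` (runtime metadata). The sketch below is a hypothetical minimal handler, not part of this project; because it is a plain function, it can also be called locally without AWS:

```python
import json


def lambda_handler(event, context):
    """Entry point Lambda calls once per invocation.

    `event` is the invocation payload (a dict for JSON events);
    `context` is runtime metadata supplied by Lambda (unused here).
    """
    name = event.get("name", "world")
    return {"statusCode": 200, "body": json.dumps({"message": f"hello {name}"})}


if __name__ == "__main__":
    # Local smoke test: call the handler directly with a fake event, no AWS involved.
    print(lambda_handler({"name": "quest"}, None))
```

With `handler = "app.lambda_handler"` in Terraform, Lambda looks for a function named `lambda_handler` in `app.py`.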

All source code is stored at: https://github.com/ngurah-bagus-trisna/aws-vpc-iaac

When using Lambda, you are responsible only for your code; Lambda manages the compute and other resources needed to run it. In this journey, I want to create a reporting Lambda that accesses the RDS database, runs a simple `SELECT NOW();`, serializes the result to JSON, and puts it into an S3 bucket.

This is a sequel to yesterday's article about RDS & Serverless Foundations. You may want to read that article before continuing.

- Create the bucket resource in `s3.tf`

These Terraform resources provision a bucket called `nb-quest-reports` and attach a public access block that blocks public ACLs and bucket policies, securing access to the bucket.

resource "aws_s3_bucket" "nb-quest-reports" {

bucket = "nb-quest-reports"

tags = {

"Name" = "nb-quest-report"

}

}

resource "aws_s3_bucket_public_access_block" "nb-quest-deny-access" {

bucket = aws_s3_bucket.nb-quest-reports.id

block_public_acls = true

block_public_policy = true

ignore_public_acls = true

restrict_public_buckets = true

}
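After `tofu apply`, one way to double-check that the bucket is really locked down is to read the block configuration back. This is a minimal sketch assuming boto3 and credentials allowed to call `s3:GetBucketPublicAccessBlock`; the helper name `is_fully_blocked` is hypothetical. The check is a pure function over the response dict, so it can be tested without AWS:

```python
REQUIRED_FLAGS = ("BlockPublicAcls", "IgnorePublicAcls", "BlockPublicPolicy", "RestrictPublicBuckets")


def is_fully_blocked(response: dict) -> bool:
    """True if every public-access-block flag is enabled.

    `response` has the shape returned by boto3's s3.get_public_access_block().
    """
    config = response.get("PublicAccessBlockConfiguration", {})
    return all(config.get(flag) is True for flag in REQUIRED_FLAGS)


if __name__ == "__main__":
    import boto3  # needs credentials allowed to call s3:GetBucketPublicAccessBlock

    s3 = boto3.client("s3")
    # Raises a botocore ClientError if the bucket has no public access block at all
    resp = s3.get_public_access_block(Bucket="nb-quest-reports")
    print("fully blocked:", is_fully_blocked(resp))
```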

- Define an IAM policy for Lambda allowing access to `ssm:GetParameter` and `s3:PutObject`, and write it into `lambda.tf`.

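First, create an `aws_iam_policy` called `lambdaPolicy` that allows the following actions:

- Put objects into AWS S3 storage
- Get parameters from AWS Systems Manager
- Get secret values from AWS Secrets Manager
- Create, describe, and delete network interfaces (ENIs), which Lambda needs to run inside a VPC
- Write logs to CloudWatch Logs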

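After the policy is created, create a role whose trust policy allows only the Lambda service to assume it, then attach the new policy to the role. Finally, define the `aws_lambda_function` itself, running inside the private subnet and receiving the DB and bucket details through environment variables.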

resource "aws_iam_policy" "lambda-policy" {

name = "lambdaPolicy"

path = "/"

description = "Allow write to S3 and read System Parameter"

policy = jsonencode({

"Version" = "2012-10-17",

"Statement" = [

{

"Sid" = "AllowPutObject",

"Effect" = "Allow",

"Action" = [

"s3:PutObject"

],

"Resource" = [

"*"

]

},

{

"Sid" = "AllowGetParameterSSM",

"Effect" = "Allow",

"Action" = [

"ssm:GetParameter"

],

"Resource" = [

"*"

]

},

{

"Sid" = "AllowGetSecretValue",

"Effect" = "Allow",

"Action" = [

"secretsmanager:GetSecretValue"

],

"Resource" = [

"*"

]

},

{

"Sid": "AllowManageENI",

"Effect": "Allow",

"Action": [

"ec2:CreateNetworkInterface",

"ec2:DescribeNetworkInterfaces",

"ec2:DeleteNetworkInterface"

],

"Resource": "*"

},

{

"Sid": "AllowCloudWatchLogs",

"Effect": "Allow",

"Action": [

"logs:CreateLogGroup",

"logs:CreateLogStream",

"logs:PutLogEvents"

],

"Resource": "*"

}

]

}

)

}

resource "aws_iam_role" "reporting_lambda_role" {

depends_on = [aws_iam_policy.lambda-policy]

name = "ReportingLambdaRole"

assume_role_policy = jsonencode({

Version = "2012-10-17",

Statement = [

{

Effect = "Allow"

Principal = {

Service = "lambda.amazonaws.com"

},

Action = "sts:AssumeRole"

}

]

})

}

resource "aws_iam_role_policy_attachment" "lambda_policy_attachment" {

depends_on = [

aws_iam_policy.lambda-policy,

aws_iam_role.reporting_lambda_role

]

role = aws_iam_role.reporting_lambda_role.name

policy_arn = aws_iam_policy.lambda-policy.arn

}
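The policy above uses `"*"` for every resource. As an optional tightening (not what this project deploys), the S3 and Secrets Manager statements could be scoped to the bucket and to the secret ARN. Here is a sketch in Python that builds the same kind of JSON `jsonencode` produces, alongside the role's trust policy; the function names and the example ARN are placeholders:

```python
import json


def trust_policy() -> dict:
    """Trust relationship equivalent to the role above: only Lambda may assume it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def scoped_statements(bucket: str, secret_arn: str) -> list:
    """Hypothetical least-privilege versions of the S3 and Secrets Manager statements."""
    return [
        {
            "Sid": "AllowPutObject",
            "Effect": "Allow",
            "Action": ["s3:PutObject"],
            # Object-level ARN: PutObject acts on keys inside the bucket
            "Resource": [f"arn:aws:s3:::{bucket}/*"],
        },
        {
            "Sid": "AllowGetSecretValue",
            "Effect": "Allow",
            "Action": ["secretsmanager:GetSecretValue"],
            "Resource": [secret_arn],
        },
    ]


if __name__ == "__main__":
    # Placeholder ARN; the real one comes from master_user_secret[0].secret_arn
    example_arn = "arn:aws:secretsmanager:REGION:ACCOUNT_ID:secret:EXAMPLE"
    print(json.dumps(trust_policy(), indent=2))
    print(json.dumps(scoped_statements("nb-quest-reports", example_arn), indent=2))
```

In Terraform the same resources would be `"${aws_s3_bucket.nb-quest-reports.arn}/*"` and `aws_db_instance.nb-db.master_user_secret[0].secret_arn`. The ENI and CloudWatch Logs statements would stay as they are; the SSM statement is arguably unnecessary, since the handler in this article does not call SSM.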

resource "aws_lambda_function" "db_to_s3_lambda" {

depends_on = [

aws_db_instance.nb-db,

aws_s3_bucket.nb-quest-reports,

aws_iam_role_policy_attachment.lambda_policy_attachment

]

function_name = "dbToS3Lambda"

handler = "app.lambda_handler"

runtime = "python3.12"

filename = "${path.module}/lambda.zip"

role = aws_iam_role.reporting_lambda_role.arn

source_code_hash = filebase64sha256("${path.module}/lambda.zip")

timeout = 10

vpc_config {

subnet_ids = [aws_subnet.nb-subnet["private-net-1"].id]

security_group_ids = [aws_security_group.rds-sg.id, aws_security_group.web-sg.id]

}

environment {

variables = {

SECRET_NAME = aws_db_instance.nb-db.master_user_secret[0].secret_arn

BUCKET_NAME = aws_s3_bucket.nb-quest-reports.id

DB_HOST = aws_db_instance.nb-db.address

DB_USER = aws_db_instance.nb-db.username

DB_NAME = aws_db_instance.nb-db.db_name

}

}

}
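`source_code_hash` is what makes Terraform notice when `lambda.zip` changes: `filebase64sha256` returns the base64-encoded SHA-256 of the file's bytes, and the `CodeSha256` value Lambda reports for a function uses the same encoding. A Python equivalent (a hypothetical helper that reads in chunks) is handy for comparing a local zip with what is deployed:

```python
import base64
import hashlib


def filebase64sha256(path: str, chunk_size: int = 65536) -> str:
    """Base64-encoded SHA-256 of a file, matching Terraform's filebase64sha256()."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in chunks so large deployment packages don't need to fit in memory
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")


if __name__ == "__main__":
    print(filebase64sha256("lambda.zip"))
```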

- Create the Lambda function.

In this section I use Python to write the function (`app.py`, matching `handler = "app.lambda_handler"`) with the following actions:

- Read DB credentials (from Env / SSM / Secrets Manager)
- Connect to the RDS endpoint
- Run a simple `SELECT NOW();` query
- Serialize the result to JSON and `PUT` it into your S3 bucket
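Two of the steps above are pure data handling and can be sketched without touching AWS: parsing the RDS-managed secret (a JSON string with `username` and `password` keys) and turning the `SELECT NOW()` result into an S3 key plus JSON body. The helpers below are hypothetical; the compact UTC key format (no colons) and the ISO 8601 body are my own choices rather than what the handler uses:

```python
import json
from datetime import datetime, timezone
from typing import Optional, Tuple


def parse_rds_secret(secret_string: str) -> Tuple[str, str]:
    """Extract (username, password) from an RDS-managed master user secret."""
    data = json.loads(secret_string)
    return data["username"], data["password"]


def build_report(db_now: datetime, run_at: Optional[datetime] = None) -> Tuple[str, str]:
    """Return (S3 key, JSON body) for one SELECT NOW() result.

    The key uses a compact UTC timestamp without colons, e.g. timestamp_20250624T100005Z.json.
    """
    run_at = (run_at or datetime.now(timezone.utc)).astimezone(timezone.utc)
    key = f"timestamp_{run_at.strftime('%Y%m%dT%H%M%SZ')}.json"
    body = json.dumps({"timestamp": db_now.isoformat()})
    return key, body


if __name__ == "__main__":
    print(parse_rds_secret('{"username": "admin", "password": "example"}'))
    print(build_report(datetime(2025, 6, 24, 10, 0, 0)))
```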

```python
import json
import os
import time
from datetime import datetime, timezone

import boto3
import pymysql  # not part of the Lambda runtime; must be bundled in lambda.zip
from botocore.exceptions import ClientError


def lambda_handler(event, context):
    print("🚀 Lambda started.")

    # Step 1: Load env vars set by the Terraform `environment` block
    try:
        secret_name = os.environ['SECRET_NAME']
        bucket_name = os.environ['BUCKET_NAME']
        db_host = os.environ['DB_HOST']
        db_user = os.environ['DB_USER']
        db_name = os.environ['DB_NAME']
        print(f"✅ Env vars loaded:\n DB_HOST={db_host}\n DB_USER={db_user}\n DB_NAME={db_name}\n BUCKET={bucket_name}")
    except KeyError as e:
        print(f"❌ Missing environment variable: {str(e)}")
        return {"statusCode": 500, "body": "Missing environment variable"}

    # Step 2: Get DB password from Secrets Manager (the RDS-managed secret is JSON)
    try:
        secrets_client = boto3.client('secretsmanager')
        print("🔍 Fetching password from Secrets Manager...")
        start_time = time.time()
        secret_value = secrets_client.get_secret_value(SecretId=secret_name)
        password = json.loads(secret_value['SecretString'])['password']
        print(f"✅ Password fetched in {round(time.time() - start_time, 2)}s")
    except ClientError as e:
        print(f"❌ Failed to get secret: {e.response['Error']['Message']}")
        return {"statusCode": 500, "body": "Failed to get DB password"}
    except Exception as e:
        print(f"❌ Unexpected error getting secret: {str(e)}")
        return {"statusCode": 500, "body": "Error while retrieving secret"}

    # Step 3: Connect to DB
    try:
        print("🔌 Connecting to DB...")
        start_time = time.time()
        conn = pymysql.connect(
            host=db_host,
            user=db_user,
            password=password,
            database=db_name,  # `db=` is a deprecated alias
            connect_timeout=5,
        )
        print(f"✅ Connected to DB in {round(time.time() - start_time, 2)}s")
    except Exception as e:
        print(f"❌ DB connection failed: {str(e)}")
        return {"statusCode": 500, "body": "Failed to connect to database"}

    # Step 4: Run SELECT NOW(); close the connection even if the query fails
    try:
        print("📡 Executing query...")
        start_time = time.time()
        with conn.cursor() as cursor:
            cursor.execute("SELECT NOW();")
            result = cursor.fetchone()
        print(f"✅ Query result: {result[0]} in {round(time.time() - start_time, 2)}s")
    except Exception as e:
        print(f"❌ Query failed: {str(e)}")
        return {"statusCode": 500, "body": "DB query failed"}
    finally:
        conn.close()

    # Step 5: Serialize the result to JSON and upload it to S3
    try:
        print("☁️ Uploading to S3...")
        start_time = time.time()
        s3_client = boto3.client('s3')
        payload = json.dumps({"timestamp": str(result[0])})
        # datetime.utcnow() is deprecated since Python 3.12; use an aware UTC timestamp
        filename = f"timestamp_{datetime.now(timezone.utc).isoformat()}.json"
        s3_client.put_object(
            Bucket=bucket_name,
            Key=filename,
            Body=payload,
        )
        print(f"✅ Uploaded to S3: {filename} in {round(time.time() - start_time, 2)}s")
    except Exception as e:
        print(f"❌ Failed to upload to S3: {str(e)}")
        return {"statusCode": 500, "body": "S3 upload failed"}

    return {
        "statusCode": 200,
        "body": f"Saved to S3 as {filename}"
    }
```
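`pymysql` is not included in the Lambda Python runtime, so `lambda.zip` has to bundle it next to `app.py`. One way to do this, assuming `app.py` is copied into a `build/` directory and the dependency is installed there with `pip install pymysql --target build`, is to zip that directory's contents with the standard library. The directory names and the `build_lambda_zip` helper are hypothetical:

```python
import os
import zipfile


def build_lambda_zip(build_dir: str, zip_path: str) -> list:
    """Zip everything under build_dir so app.py and packages sit at the archive root.

    Returns the archive member names. zip_path should live outside build_dir.
    """
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(build_dir):
            for name in files:
                full_path = os.path.join(root, name)
                # Store paths relative to build_dir, e.g. "pymysql/__init__.py"
                zf.write(full_path, os.path.relpath(full_path, build_dir))
        return zf.namelist()


if __name__ == "__main__":
    print(build_lambda_zip("build", "lambda.zip"))
```

The files must sit at the archive root rather than under `build/`, otherwise Lambda cannot import `app.lambda_handler`. Since `pymysql` is pure Python, building the zip on a different OS than Lambda's should not matter here; packages with compiled extensions would need Linux-compatible wheels.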

- Plan and provision to AWS (using the OpenTofu `tofu` CLI)

```bash
tofu plan
tofu apply
```

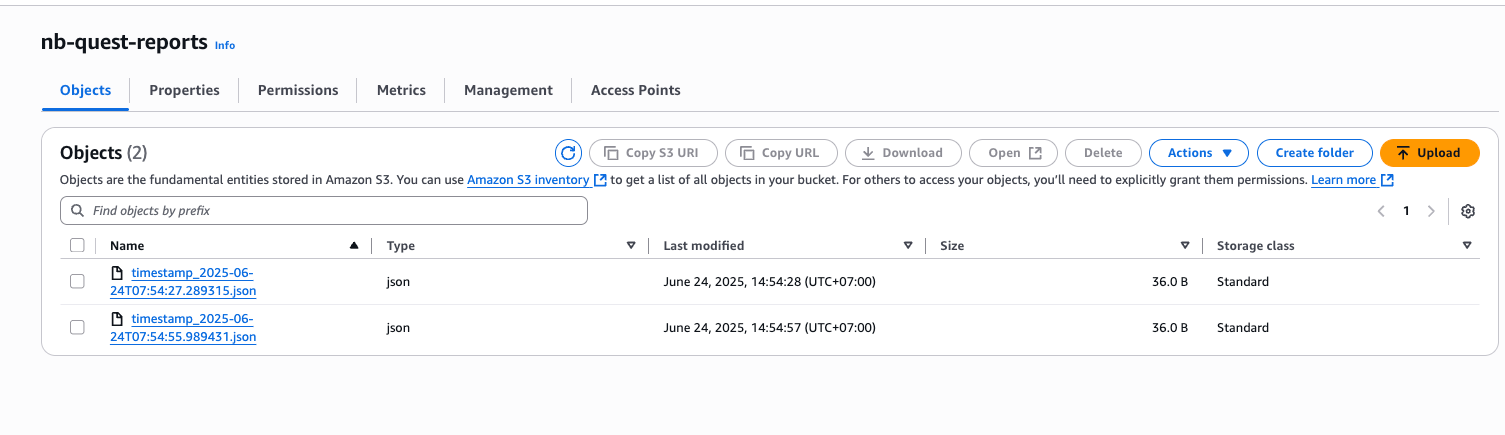

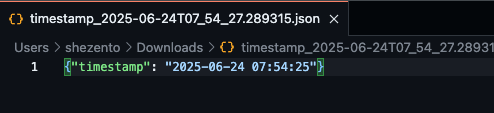

Result

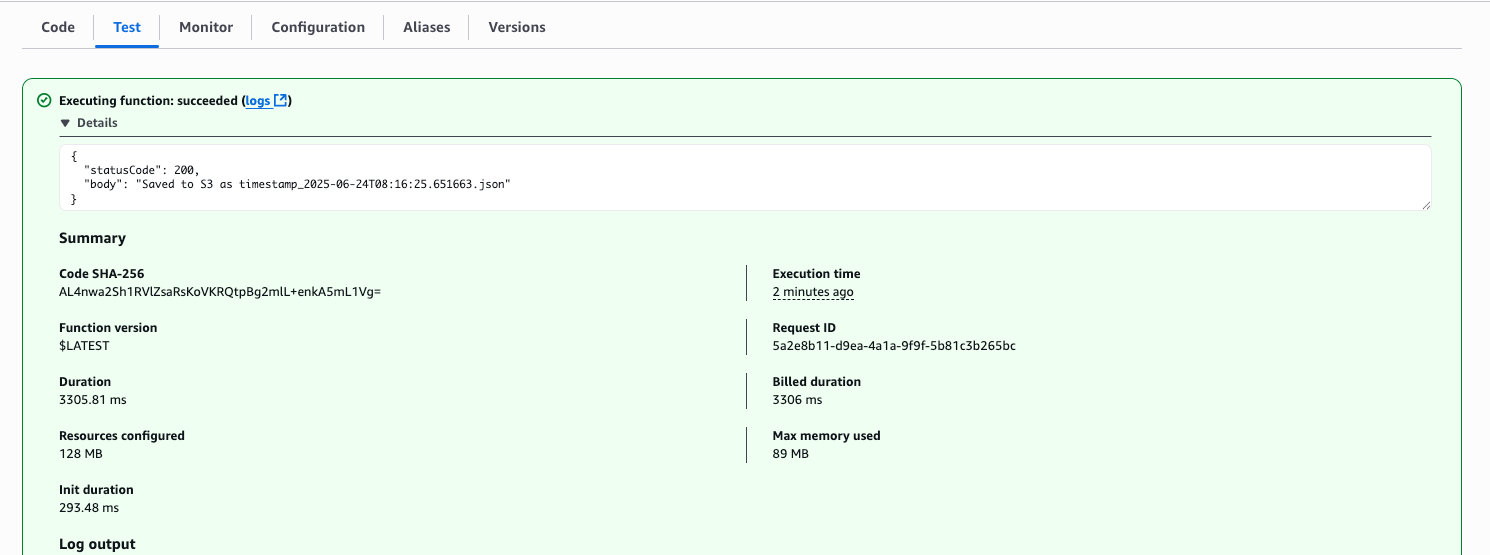

Test in the AWS Lambda console
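Besides the console test, the function can be invoked from a script. This is a minimal sketch assuming boto3 and permission to call `lambda:InvokeFunction`; `invoke_report` is a hypothetical helper, and the client is passed in so the logic can be exercised with a fake:

```python
import json


def invoke_report(lambda_client, function_name: str = "dbToS3Lambda") -> dict:
    """Invoke the function synchronously and return the handler's decoded return value."""
    resp = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the result
        Payload=b"{}",  # this handler ignores the event
    )
    raw = resp["Payload"].read()
    # FunctionError is only set when the handler raises or the runtime fails
    if "FunctionError" in resp:
        raise RuntimeError(f"Lambda error ({resp['FunctionError']}): {raw!r}")
    return json.loads(raw)


if __name__ == "__main__":
    import boto3  # needs credentials allowed to call lambda:InvokeFunction

    print(invoke_report(boto3.client("lambda")))
```

Because this handler reports failures by returning `statusCode: 500` instead of raising, `FunctionError` stays unset in those cases, so a caller should also check `statusCode` in the returned body.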

Done: the function fetches data from the DB and stores it in S3.